So far in the series, we have talked about the Facebook model, its algorithm, its business, and its impact across societies and democracies on the planet. One of the intriguing questions that remains is that of the “how”. How is it such a successful model? In this concluding part of Democracy c/o Facebook, Siddhartha Dasgupta brings up the human user side of the story. Why are Facebook and its model so successful with the human user? This is Part 3 of the series. You can read Part 1 and Part 2 here.

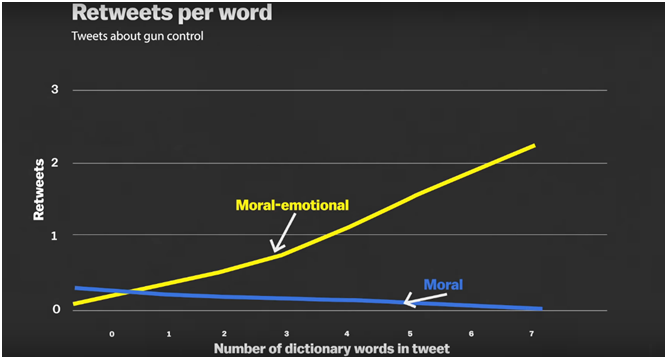

The success of the Facebook business model in engineering society at such a rapid pace and on such a global scale has drawn researchers worldwide to study how the human mind looks at news, facts and opinions. Jay Van Bavel, a professor at New York University, has extensively studied human reactions to social media content. His research found that tweets containing moral-emotional words like “blame”, “hate” and “shame” were far more likely to be retweeted than tweets with comparably neutral language.

This has largely to do with the human craving to form strong identities and groups, something that is not new at all, and is in fact a continuation of our tribal past. What is new in the times of social media is the scale, the reach and the volume of it. According to researchers like Van Bavel, what sets the current situation apart is the absence of “social checks” in exchanges on platforms such as Facebook. In the absence of such checks, he claims, generating addictive content is almost synonymous with generating partisan, polarising, hate-based content. Social media websites are in effect built on the principle of removing such “social checks”, which is why they attract such large numbers of consumers (users), whom they then micro-target. What makes the model so successful is not just the efficiency of the algorithm, but also the fact that the human users themselves voluntarily participate in it. They are allowed to choose which “groups” to join, which “pages” to like, and which pages to block. At the same time, the algorithm multiplies the effect of these choices. As an experiment, when this author watched an innocuous YouTube travel video on Kashi (Varanasi), the suggested playlist immediately threw up a fake news channel that regularly twists archaeological facts to make fantastic claims about Hindu mythology.

Together, platforms like Facebook, Instagram, Twitter and YouTube, with their machine algorithms driven by popularity and revenue maximization, have enabled human beings to design personally curated world-views and dissonance-free echo chambers of political opinion, at a scale that couldn’t have been imagined even 20 years ago.

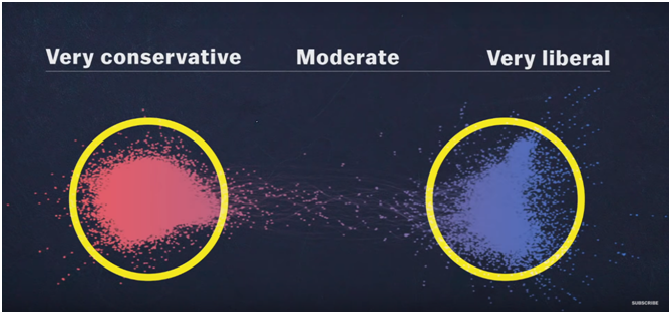

How moral-emotional tweets get shared: The circled parts are what researchers call “positive feedback chambers”, “dissonance-free chambers”, or “echo chambers”. These are people who agree strongly with each other. The space between the two chambers shows how rare dialogue between the two sets is. This is what Twitter polarisation looks like.

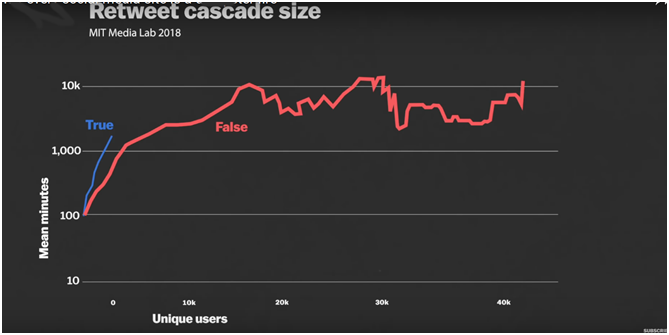

One study looked at the spread of true and false stories on Twitter. After tracing true and false tweets over the period from 2006 to 2017 and counting the number of unique retweets, researchers found not only that the fake stories reached thousands more people than the true stories did in real time, but also that the fake stories had a much longer resonating effect. They would bounce around in the Twitter network long after the true stories had died away, eventually gathering tens of thousands of retweets in excess of what the true stories did during their life span. In other words, fake Twitter stories reached far more people than the true ones.

Faced with growing criticism, Facebook had to bring down several pages and block several users and fake accounts against whom complaints had been filed. This included Cambridge Analytica. Facebook eventually had to ask CA to destroy all the data it had collected. Later on, Facebook also declared it was going to terminate contracts with other such companies, known as “data brokers”. Hours after the NYT revelations, in a shift from the usual rhetoric of “primacy of free speech”, Mark Zuckerberg announced that Facebook was planning to create an “Internal Supreme Court” to deal with allegations regarding what should qualify as free speech and what should not.

So far Facebook has only exercised moderation when it comes to pornographic or overtly violent content. Though Facebook has made claims of using Artificial Intelligence software for such tasks, the process is far from mechanized and automatic. According to an Al Jazeera report, there are around 150 thousand human content moderators working throughout the world, on salaries as low as 1 dollar per hour, going through 2000 photos per hour (that means they have about 1.8 seconds to judge whether a particular image adheres to community rules or not). Thus while content generation on social media platforms is happening at lightning speed using algorithms, the task of moderating that content is still done manually, and that creates an unbridgeable gap between the two. The gap can only widen over time if this model continues.

The question is whether punishing the “bad actors” or exercising more “content moderation” is going to be enough. Many have raised concerns about what happens if a private enterprise like Facebook does in fact begin to moderate and regulate its content.

In an interview to Frontline, Alex Stamos, Chief Security Officer for Facebook from 2015 to 2018, said, “These are very very powerful corporations, they do not have any kind of traditional democratic accountability. If we set the norms that these companies need to decide who does or does not have a voice online, eventually that is going to go to a very dark place”.

As the profits keep soaring, Facebook continues to stress that it is not a part of the problem, but a part of the solution. In a note published on November 15 titled “A Blueprint for Content Governance and Enforcement”, Mark Zuckerberg concluded by saying “There is no single solution to these challenges, and these are not problems you ever fully fix. But we can improve our systems over time, as we’ve shown over the last two years. We will continue making progress as we increase the effectiveness of our proactive enforcement and develop a more open, independent, and rigorous policy-making process. And we will continue working to ensure that our services are a positive force for bringing people closer together.”

There are a ton of Facebook internal emails out now from parliament. https://t.co/DlSSlq9WfQ

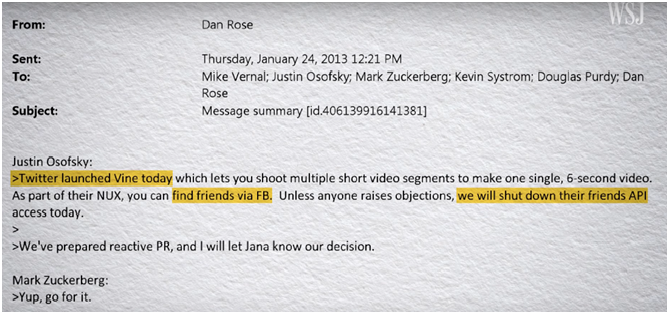

The biggest reveal here isn’t the data privacy stuff— it’s how ruthless Facebook is as a competitor. pic.twitter.com/3QqlYRYDK6— Sarah Frier (@sarahfrier) December 5, 2018

The story of how far and how deep Facebook’s involvement in user data infringement goes is meanwhile far from over. According to the latest damning reports, a British lawmaker has released 250 pages of internal Facebook email conversations that seem to indicate that Facebook was not only consciously giving third parties access to user information, but in fact had a revenue model based on giving such access. One email shows how Facebook CEO Zuckerberg gave a “go ahead” to the decision to bar Twitter-run Vine from using Facebook users’ friend list data.

Another email exchange shows how several employees proposed charging developers money in exchange for access to users’ data. One employee is seen arguing for refusing access to such data unless a developer is willing to pay $250K a year. This conversation was dated just before the company went public, around the time when Facebook was exploring newer, more radical ways of raising money and attracting advertisers.

In fact, the documents also show that Zuckerberg kept a list of strategic competitors whose access permissions he would personally review. These platforms, and the apps produced by them, were not to be allowed the usual access “without Mark level sign-off”.

Facebook has responded to these revelations by saying that the emails have been taken out of context and paint a misleading picture. “One of the most important parts of how Facebook works, is we do not sell data. Advertisers do not get access to people’s individual data,” is what Mark Zuckerberg has been saying repeatedly without batting an eyelid. But with every passing day, his claims seem to become more and more untenable. Recent findings, and the observable situation of societies and democracies across the world embattled by the social engineering of a profit-driven “social” media, have forced us to confront the elephant in the room: “Can the Facebook model, the way it is, be governed at all?”

We end this series by quoting Zuckerberg himself, as he addressed the US Senate at his hearing last month on allegations of meddling with elections. “Facebook is an idealistic and optimistic company. And as Facebook is growing, people everywhere have gotten a powerful new tool for making their voices heard and for building communities and businesses,” Mark Zuckerberg told the Senators, yet again reiterating that Facebook is not only not a part of the problem, but is in fact a part of the solution.